This article first appeared in BrickJournal Issue 33, 2015

The perils of Dead Reckoning!

Many teams rely heavily on dead reckoning when competing in robotics competitions. But sometimes this is not the best option.

What is Dead Reckoning?

Dead reckoning is the process of driving your robot around without the aid of external sensors. The theory goes, if you know where you start, and you know exactly how far you are telling your motors to travel, then you can calculate *exactly* where you’ll end up after a move.

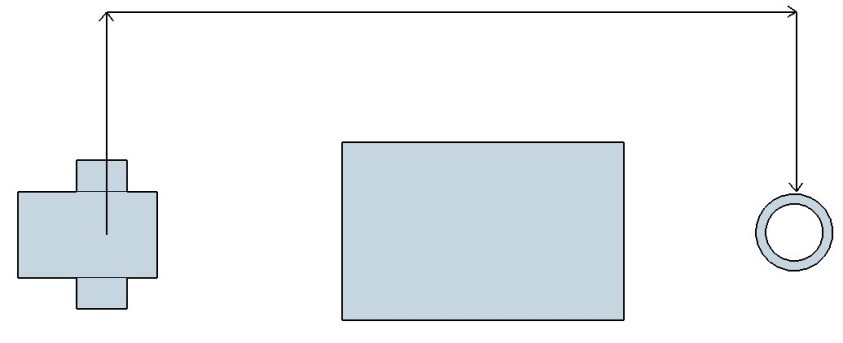

Well, that sounds great in theory. Suppose I’ve got the following challenge: navigate the robot around the obstacle and stop at the ring.

Provided I line up my robot perfectly straight, this could be a possible solution:

- Turn 90 degrees left

- Drive forward

- Turn 90 degrees right

- Drive forward

- Turn 90 degrees right

- Drive forward
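To make the "calculate where you'll end up" idea concrete, here is a minimal sketch of the bookkeeping behind the steps above. It is an illustration only: the distances in `PLAN` are made up (the challenge does not specify any), and the function name `dead_reckon` is my own.

```python
import math

def dead_reckon(steps, x=0.0, y=0.0, heading_deg=90.0):
    """Return the (x, y, heading) the robot *believes* it reaches.

    steps is a list of ("turn", degrees) or ("drive", millimetres).
    Positive turns are to the left (counter-clockwise).
    A heading of 90 means facing "up" the mat (+y direction).
    """
    for action, amount in steps:
        if action == "turn":
            heading_deg = (heading_deg + amount) % 360
        elif action == "drive":
            rad = math.radians(heading_deg)
            x += amount * math.cos(rad)
            y += amount * math.sin(rad)
        else:
            raise ValueError(f"unknown action: {action}")
    return x, y, heading_deg

# Hypothetical distances in millimetres; pick values to suit your own mat.
PLAN = [
    ("turn", 90), ("drive", 300),   # turn left, drive forward
    ("turn", -90), ("drive", 600),  # turn right, drive forward
    ("turn", -90), ("drive", 300),  # turn right, drive forward
]

x, y, heading = dead_reckon(PLAN)
print(f"x={x:.1f} mm, y={y:.1f} mm, heading={heading:.0f} deg")
```

Notice that nothing in this calculation ever checks where the robot really is. It only adds up what the motors were told to do.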

In the robot’s mind, what it is effectively doing is closing its eyes and driving blindly while following its instructions. Then at the end it opens its eyes and hopes that it is in the right place. In reality there are often things that can go wrong.

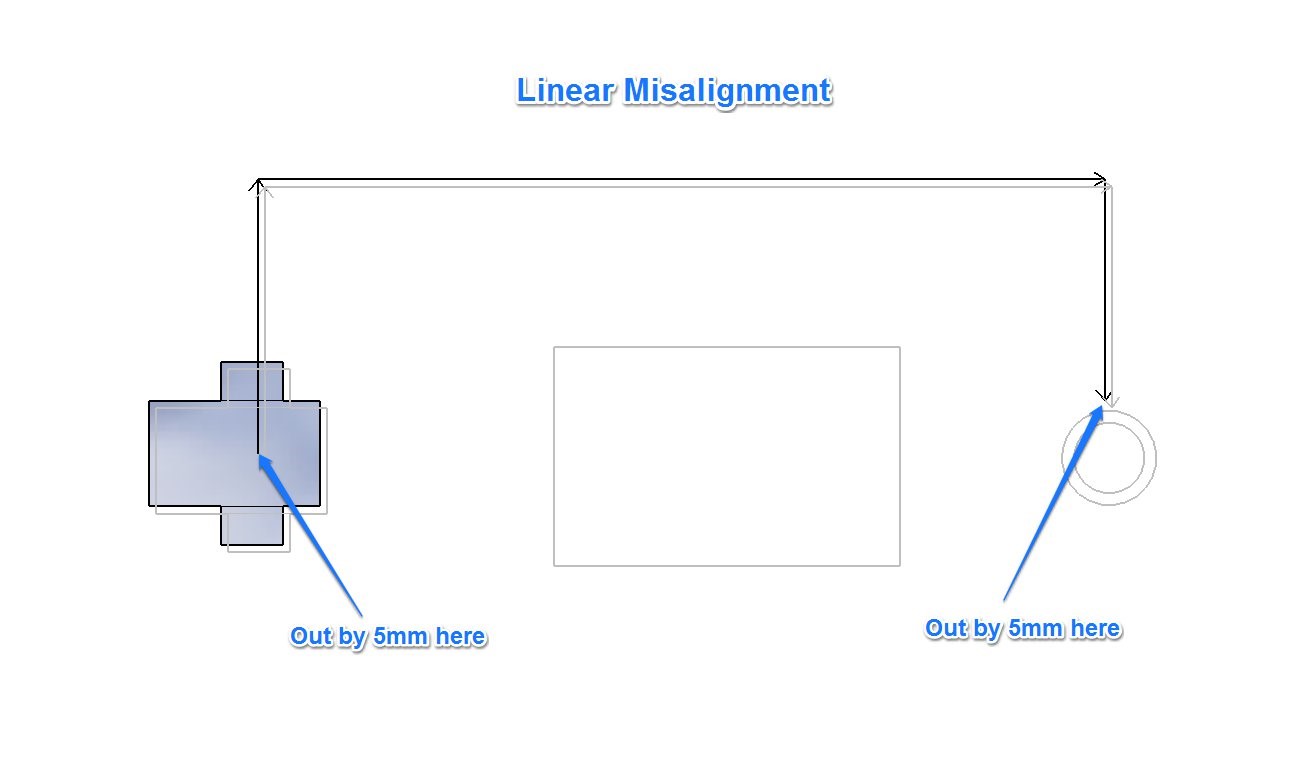

Misalignment

Looking back at the above example, let’s suppose that in the rush of the competition countdown clock, I don’t line up my robot as well as I wanted, and I put it down 2 degrees counter-clockwise from what I had intended. Two degrees isn’t very much at all, and to the human eye is probably not even noticeable. But because the robot doesn’t realise it is misaligned, it will just continue on its predetermined path, and that small angle can lead to significant errors in where the robot finishes. This is called Rotational Misalignment.

A similar problem, Linear Misalignment, is where the robot is placed straight, but perhaps just a few millimetres away from its ideal starting position. The end error isn’t as bad, but it can still have an effect on your robot’s position.
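A quick bit of trigonometry shows why the rotational case is the nastier of the two. The sketch below assumes the robot drives in a perfectly straight line with a fixed heading error, which is a simplification. The function name and the example distances are my own.

```python
import math

def sideways_miss(distance_mm, heading_error_deg):
    """Sideways error after driving straight with a constant heading error."""
    return distance_mm * math.sin(math.radians(heading_error_deg))

# Example drive lengths (hypothetical) with the 2 degree error from above.
for distance in (250, 500, 1000):
    miss = sideways_miss(distance, 2)
    print(f"{distance} mm drive, 2 degrees off: about {miss:.0f} mm sideways")
```

Over a 1 metre drive, a 2 degree error works out to roughly 35 mm sideways, and it keeps growing the further you go. A linear misalignment of a few millimetres, by contrast, stays a few millimetres no matter how far you drive.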

Other Factors

There are a few other factors that can impact your robot’s final position if it is just using dead reckoning.

- Friction of the surface: Even though you may have told your robot to spin the wheels so that it travels exactly 500mm, in reality a surface that has more friction will result in the robot not travelling as far as you expected.

- Weight of the robot: Just like the friction of the game surface, the weight of the robot will impact how far it travels.

- Obstacles along the way: If your robot accidentally clips the obstacle along its path, that will upset which way the robot is heading.

- Hubs moving within the tires: Even though you may think of the wheels as being a single piece, in fact the hubs can move slightly inside the tires as they drive along.

You can see that after a few runs, the hub does not line up exactly with the tire.

While each of these factors is small by itself, when many movements are combined, all the little errors add up to quite a large error.

What to do?

Dead reckoning can be useful if it is used sparingly and only over short distances. To improve on it, you’ll need to add sensors. I’ll look at a few basic ways to combine the Colour Sensor with dead reckoning to get a far more reliable robot.

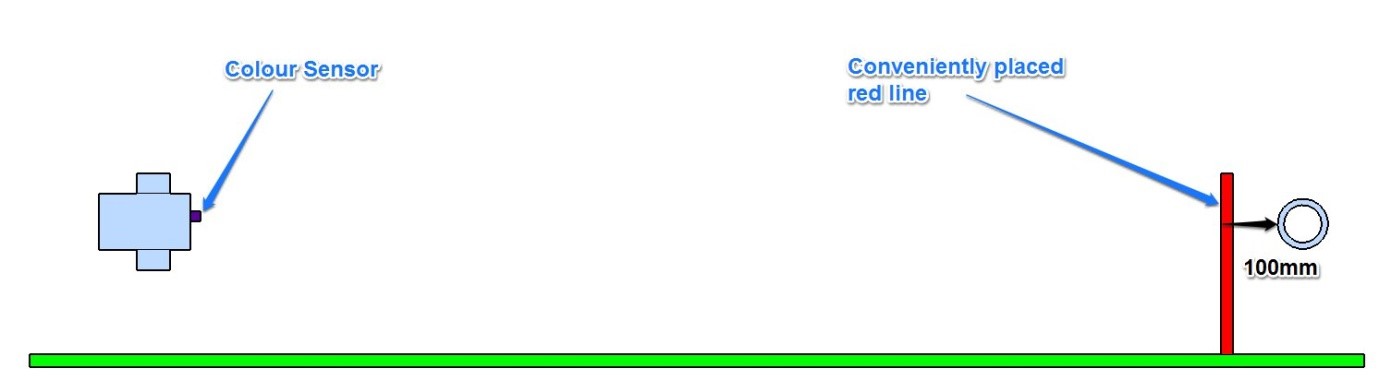

Challenge 1: Drive your robot up to the ring.

In this challenge, the ring is quite a large distance away. We already know that friction of the surface, weight of the robot and tire slippage will affect how the robot performs, so while we could measure the exact distance and calculate from the tire circumference, there is a very good chance we’ll be out by a small amount. What is handy to know is that the ring is 100mm away from a very conveniently placed red line.
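For reference, the tire circumference calculation mentioned above might look something like this. The 56 mm wheel diameter is an assumption on my part, so measure your own tire before trusting the numbers.

```python
import math

WHEEL_DIAMETER_MM = 56  # assumed value; measure the tire you are actually using

def wheel_degrees(distance_mm, wheel_diameter_mm=WHEEL_DIAMETER_MM):
    """Degrees of wheel rotation needed to roll a given distance (no slip)."""
    circumference = math.pi * wheel_diameter_mm
    return distance_mm / circumference * 360

print(f"100 mm needs about {wheel_degrees(100):.0f} degrees of rotation")
```

With that wheel size, 100mm comes out at roughly 205 degrees of rotation. The calculation assumes the wheel never slips, which is exactly the assumption that lets us down over longer distances.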

Here is how we’ll approach this problem.

We can’t be sure of driving accurately up to the red line using motor commands alone, but we can use the Colour Sensor to know *exactly* when we get there. Once we know we’re at the red line, we can use dead reckoning to do just the last 100mm.
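To see why this helps, here is a rough simulation of the two approaches. It is not robot code. The distance to the red line and the 3% shortfall are invented numbers, and the "sensor" is just a check on the simulated position. Only the 100mm from the line to the ring comes from the challenge.

```python
LINE_AT_MM = 1200        # hypothetical distance from the start to the red line
RING_PAST_LINE_MM = 100  # from the challenge: ring is 100 mm beyond the line
SLIP = 0.03              # hypothetical: robot covers 3% less than commanded

def dead_reckoning_only(slip=SLIP):
    """Command the full distance blindly and see where we really stop."""
    commanded = LINE_AT_MM + RING_PAST_LINE_MM
    return commanded * (1 - slip)

def sensor_then_dead_reckoning(slip=SLIP, step_mm=1.0):
    """Drive until the simulated sensor sees the line, then go 100 mm blind."""
    position = 0.0
    # Keep creeping forward until the (simulated) Colour Sensor sees red.
    while position < LINE_AT_MM:
        position += step_mm * (1 - slip)
    # Only this short final move relies on dead reckoning.
    position += RING_PAST_LINE_MM * (1 - slip)
    return position

target = LINE_AT_MM + RING_PAST_LINE_MM
error_blind = target - dead_reckoning_only()
error_sensor = target - sensor_then_dead_reckoning()
print(f"dead reckoning only: {error_blind:.0f} mm short")
print(f"sensor, then dead reckoning: {error_sensor:.0f} mm short")
```

With these made-up numbers the blind run stops about 39 mm short, while the sensor-assisted run is only a couple of millimetres out. The slip is the same in both cases. It is simply that the sensor resets the error at the red line, so only the last 100mm has a chance to go wrong.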

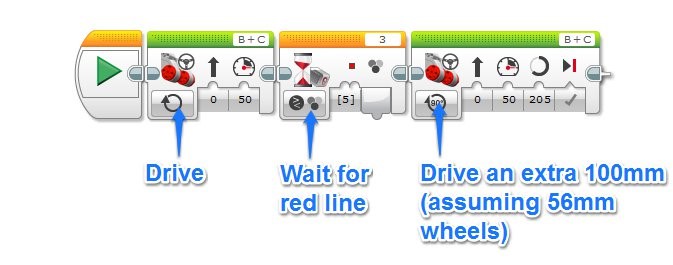

This is how it would look in the EV3-G language.

Challenge 2: Lining up.

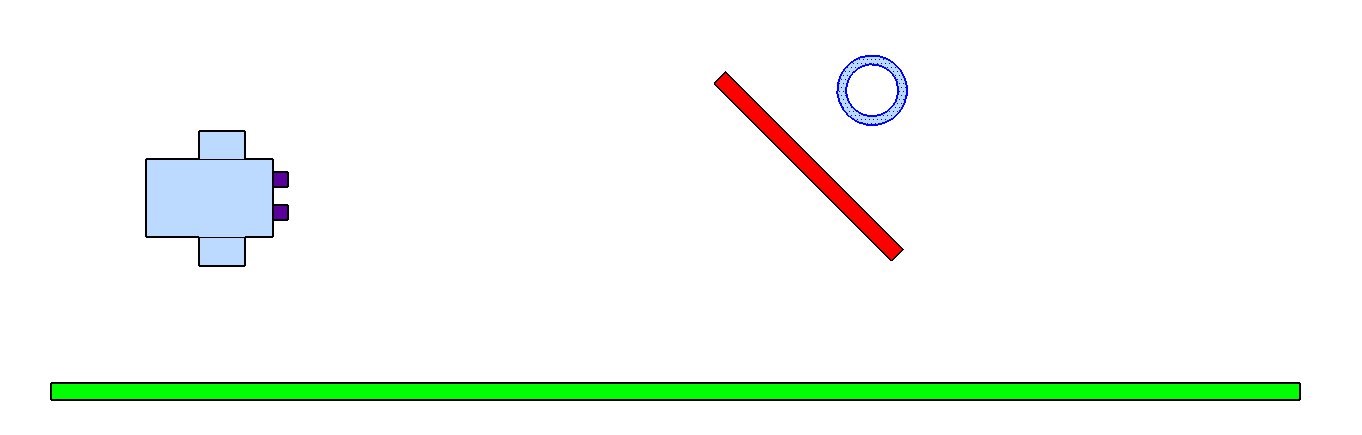

Challenge 1 works well if you know you are travelling in a straight line to your object, without any turns. What would happen if the challenge looked like this?

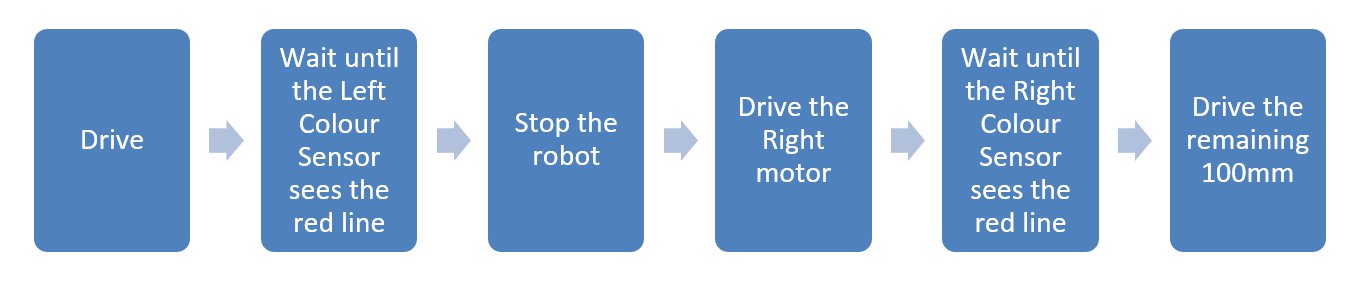

We can’t just turn and head to the ring, otherwise we’re at the mercy of Rotational Misalignment. A better course of action would be to head directly to the red line, and then use the red line to orient ourselves towards the ring. Our new plan would probably now be along these lines:

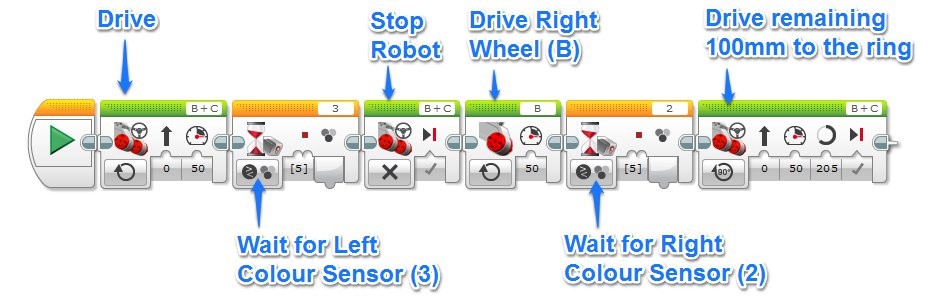

This is what it would look like in the EV3-G programming language.

What Next?

This is just the start. Can you think of other ways you can use sensors to get close to your targets, and then use dead reckoning to do just the last little bit?

Dr Damien Kee has been working with robotics in education for over 10 years, teaching thousands of students and hundreds of teachers from all over the world. He is the author of the popular “Classroom Activities for the Busy Teacher” series of robotics teacher resource books.

You can find more information at www.damienkee.com or contact him directly at damien@damienkee.com